Generating Users’ Desired Face Image Using the Conditional Generative Adversarial Network and Relevance Feedback

Caie XU, Ying TANG, Masahiro TOYOURA, Jiayi XU, Xiaoyang MAO

Abstract

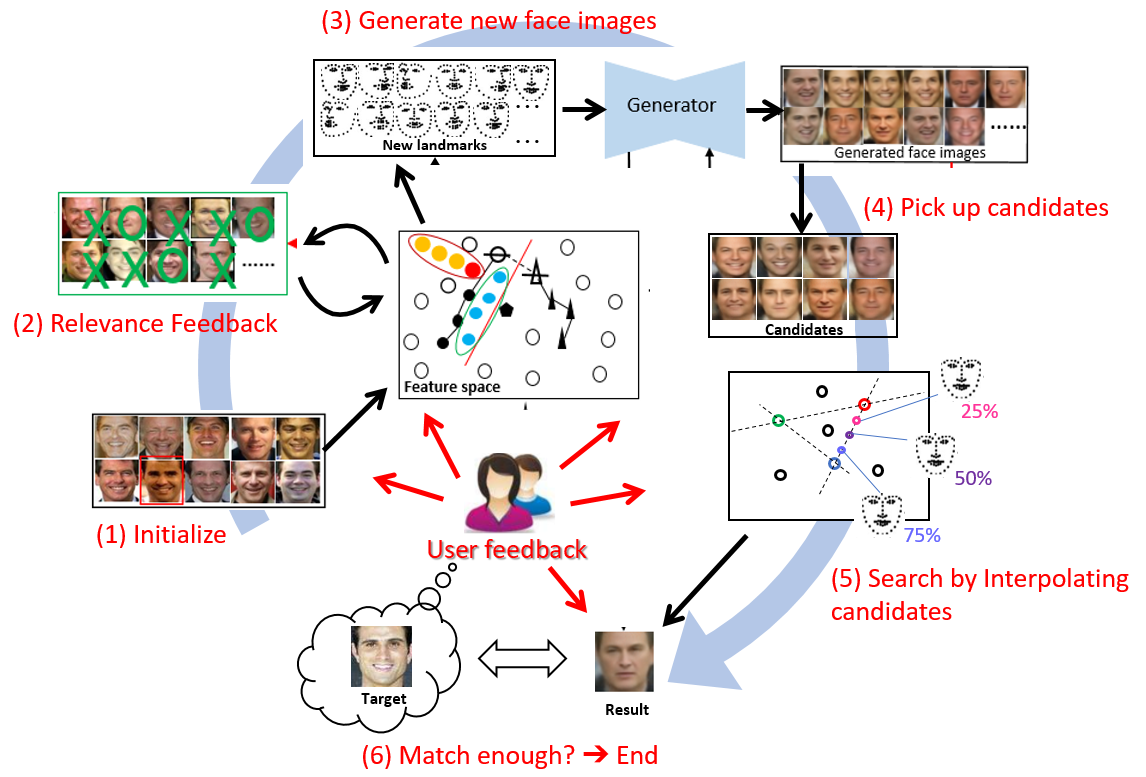

Face image synthesis has potential applications in public safety, such as video surveillance and law enforcement. For example, creating a portrait of a suspect from an eyewitness's description can greatly help the police identify criminals. We first developed a user-friendly system that synthesizes the face image a user has in mind through a dialogic approach. However, that system can generate only grayscale images, and the synthesized images are sometimes blurry. We therefore propose an improved method for generating an image of the target face by using a generative adversarial network (GAN) and relevance feedback.

Combining a GAN with relevance feedback compensates for both the lack of user intervention in GANs and the low image quality of traditional methods. The feature points of face images, namely the landmarks, serve as the conditional information for the GAN, controlling the detailed features of the generated face image. An optimum-path forest (OPF) classifier categorizes the relevance of training images based on the user's feedback, so that the user can quickly retrieve the training images most similar to the target face. The retrieved training images are then used to create a new landmark for synthesizing the target face image. The experimental results showed that users can generate images of their desired faces, including faces recalled from memory, within a reasonable number of iterations. This demonstrates the potential of the proposed method for forensic purposes, such as creating face images of criminals from the memories of witnesses or victims.
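To make the relevance-feedback step more concrete, the following is a minimal sketch of one feedback round, assuming each training face is represented by a fixed-length feature vector and a landmark array. It implements the standard supervised OPF formulation (complete graph, prototypes taken from minimum-spanning-tree edges that join differently labeled samples, and an f_max path cost). The function names `train_opf`, `classify_opf`, and `feedback_round`, the Euclidean feature distance, the parameter `k`, and the rule of averaging the landmarks of the top-ranked relevant images are all hypothetical illustrations, not the paper's actual implementation.

```python
import heapq
import numpy as np


def train_opf(X, y):
    """Supervised OPF training sketch: returns (samples, path costs, labels)."""
    X, y = np.asarray(X, dtype=float), np.asarray(y)
    n = len(X)
    D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)  # Euclidean (assumed)
    # 1) Prim's MST on the complete graph; endpoints of edges that join
    #    differently labeled samples become prototypes.
    in_tree = np.zeros(n, dtype=bool)
    best = np.full(n, np.inf)
    parent = np.full(n, -1)
    best[0] = 0.0
    prototypes = set()
    for _ in range(n):
        u = int(np.argmin(np.where(in_tree, np.inf, best)))
        in_tree[u] = True
        p = int(parent[u])
        if p >= 0 and y[p] != y[u]:
            prototypes.update((u, p))
        for v in range(n):
            if not in_tree[v] and D[u, v] < best[v]:
                best[v] = D[u, v]
                parent[v] = u
    if not prototypes:  # only one class present: seed from an arbitrary sample
        prototypes.add(0)
    # 2) Propagate optimum paths from prototypes with f_max = max edge on path.
    cost = np.full(n, np.inf)
    label = y.copy()
    heap = [(0.0, s) for s in prototypes]
    for s in prototypes:
        cost[s] = 0.0
    heapq.heapify(heap)
    done = np.zeros(n, dtype=bool)
    while heap:
        c, s = heapq.heappop(heap)
        if done[s]:
            continue
        done[s] = True
        for t in range(n):
            if not done[t]:
                new_cost = max(c, D[s, t])
                if new_cost < cost[t]:
                    cost[t] = new_cost
                    label[t] = label[s]  # t is conquered by s's tree
                    heapq.heappush(heap, (new_cost, t))
    return X, cost, label


def classify_opf(model, Z):
    """Label each row of Z by the training sample offering the cheapest path."""
    X, cost, label = model
    D = np.linalg.norm(np.asarray(Z, float)[:, None, :] - X[None, :, :], axis=-1)
    paths = np.maximum(cost[None, :], D)
    winner = np.argmin(paths, axis=1)
    return label[winner], paths[np.arange(len(Z)), winner]


def feedback_round(features, landmarks, shown, relevant_mask, k=5):
    """One hypothetical feedback round: train on the user's marks, rank the
    images classified as relevant by path cost, and average their landmarks."""
    model = train_opf(features[shown], np.asarray(relevant_mask).astype(int))
    pred, path_cost = classify_opf(model, features)
    candidates = np.flatnonzero(pred == 1)
    if candidates.size == 0:
        raise ValueError("no training image was classified as relevant")
    ranked = candidates[np.argsort(path_cost[candidates])][:k]
    new_landmark = landmarks[ranked].mean(axis=0)  # assumed combination rule
    return ranked, new_landmark
```

In use, the images indexed by `ranked` would be shown to the user for the next round, and `new_landmark` would be passed to the conditional GAN as its condition. Averaging is chosen here only because it is the simplest way to merge several landmark sets of the same shape; the paper may combine them differently.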

Links

Paper: [PDF]

Citation

Caie Xu, Ying Tang, Masahiro Toyoura, Jiayi Xu, Xiaoyang Mao, “Generating Users’ Desired Face Image Using the Conditional Generative Adversarial Network and Relevance Feedback,” IEEE Access, vol. 7, pp. 181458-181468, 2019.

Acknowledgements

This work was supported in part by the Japan Society for the Promotion of Science (JSPS) KAKENHI under Grant 17H00737 and Grant 17H00738, and in part by the Natural Science Foundation of Zhejiang Province, China, under Grant LGF18F020015.