Abstract

In Japan, the supply of domestically grown onions falls in summer, and the country relies on imports during that period. To reduce this reliance, there are high expectations for expanding onion cultivation in the Tohoku region, where the harvest falls in summer. However, onion farmers in Tohoku face a major obstacle: there are too few instructors, and the cost of traveling to the fields is high, so farmers cannot receive timely technical guidance. This creates demand for AR remote assistance apps that can provide “accurate” and “real-time” technical support.

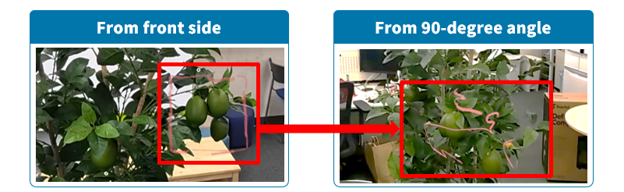

AR remote guidance is a system in which a worker wearing AR glasses and an instructor at a remote location share real-time video and audio and communicate using “annotations,” which are essentially drawings. This lets instructors cut travel costs and guide more farmers. In Remote Assist, the official app for the HoloLens 2 AR glasses, for example, annotations viewed from the front (left image) clearly indicate what is being pointed out. Viewed from any other angle (right image), however, the annotations become distorted, making it difficult to identify the target object.

In agriculture, workers often need to view an object from several angles, for example to identify a disease, so the existing display method can disrupt communication between instructor and worker.

Therefore, we propose a 3D annotation display method for AR remote assistance apps. With this method, the target object remains easy to recognize even when viewed from angles other than the front, so workers can identify it “accurately” and “clearly”.

Methodology

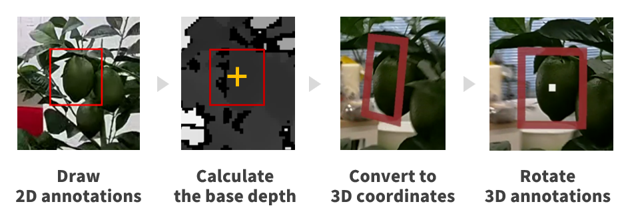

The proposed method consists of the following four steps:

- Draw 2D annotations.

- Calculate the base depth.

- Convert 2D annotations to 3D coordinates using the base depth information.

- Rotate the 3D annotations according to the user’s position.
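
The four steps above can be illustrated with a minimal Python sketch. It is not the app's actual implementation, and several details are assumptions made only for illustration: the pinhole intrinsics `fx`, `fy`, `cx`, `cy`, the choice of the median as the base depth, and the choice of a yaw-only rotation about the annotation's centroid are not specified in the text, and all function names are hypothetical. The camera is assumed to sit at the origin looking along +z when the annotation is drawn.

```python
import math
from statistics import median

def base_depth(depth_samples):
    """Step 2 (assumed rule): median of the valid depth samples under the stroke."""
    valid = [d for d in depth_samples if d > 0]  # treat non-positive values as missing
    return median(valid)

def unproject(points_2d, depth, fx, fy, cx, cy):
    """Step 3: back-project pixels (u, v) onto the plane z = depth (pinhole model)."""
    return [((u - cx) * depth / fx, (v - cy) * depth / fy, depth)
            for u, v in points_2d]

def centroid(points):
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))

def rotate_to_face(points_3d, user_pos):
    """Step 4 (assumed rule): yaw the annotation about the vertical axis
    through its centroid so that its front normal points at the user."""
    c = centroid(points_3d)
    dx, dz = user_pos[0] - c[0], user_pos[2] - c[2]
    # The front normal starts as (0, 0, -1), i.e. facing the original camera.
    theta = math.atan2(-dx, -dz)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    rotated = []
    for x, y, z in points_3d:
        rx, rz = x - c[0], z - c[2]
        rotated.append((c[0] + rx * cos_t + rz * sin_t,
                        y,
                        c[2] - rx * sin_t + rz * cos_t))
    return rotated

# Step 1: a 2D annotation stroke in pixel coordinates (hypothetical values).
stroke = [(70, 240), (570, 240)]
depth = base_depth([0.0, 1.9, 2.0, 2.1])
annotation = unproject(stroke, depth, fx=500, fy=500, cx=320, cy=240)
# The user walks to the right of the target; the annotation turns to face them.
facing_user = rotate_to_face(annotation, user_pos=(2.0, 0.0, 2.0))
```

A yaw-only rotation keeps the annotation upright while the worker walks around the target. A full billboard that also tilts toward the viewer would be an equally plausible reading of step 4.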